
Salient Object Detection for Point Clouds (ECCV 2022)

Citation:

Songlin Fan, Wei Gao*, Ge Li, "Salient Object Detection for Point Clouds," ECCV 2022.

Contact:

If you have any questions, please contact slfan@pku.edu.cn.

🔥 NEWS

- [2022/07/24] 💥Add one-key evaluation toolbox.

- [2022/07/04] Create the project.

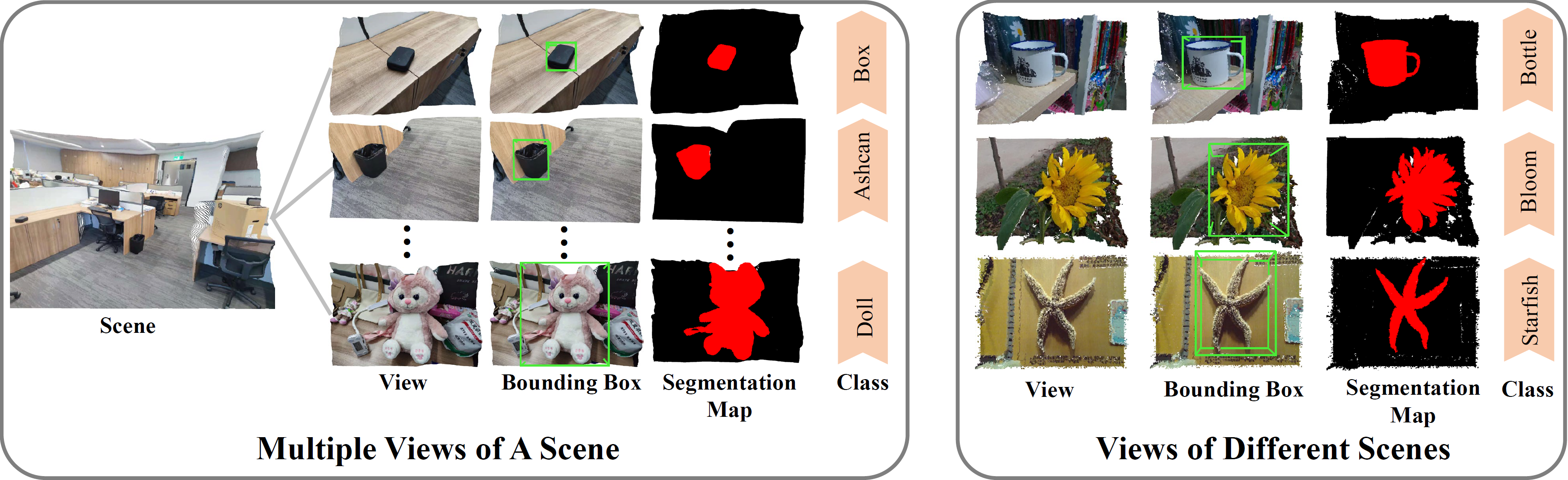

Figure 1: Illustration of point cloud salient object detection.


Abstract

This paper studies an unexplored task: point cloud salient object detection (SOD). Unlike SOD for images, we find that the attention shift of point clouds may provoke saliency conflict, i.e., an object paradoxically belongs to both salient and non-salient categories. To avoid this issue, we present a novel view-dependent perspective of salient objects, which reasonably reflects the most eye-catching objects in point clouds. Following this formulation, we introduce PCSOD, the first dataset proposed for point cloud SOD, consisting of 2,872 in-/out-door 3D views. The samples in our dataset are labeled with hierarchical annotations, e.g., super-/sub-class, bounding box, and segmentation map, which gives the dataset strong generalizability and broad applicability for verifying various conjectures. To demonstrate the feasibility of our solution, we further contribute a baseline model and benchmark five representative models for comprehensive comparisons. The proposed model can effectively analyze irregular and unordered points to detect salient objects. Thanks to its task-tailored designs, our method shows clear superiority over the other baseline models. Extensive experiments and discussions reveal the promising potential of this research field, paving the way for further study.

PCSOD dataset

Usage of our dataset is under the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International Public License.


Figure 2: Visual examples from our PCSOD dataset with hierarchical annotations.

| Dataset | Year | Pub. | Size | #Training | #Testing | Download |
|---|---|---|---|---|---|---|
| PCSOD | 2022 | ECCV | 2872 | 2000 | 872 | OpenDatasets |

Note: 3D views and annotated segmentation maps are .ply files, while bounding boxes are .npy files containing the (x,y,z) coordinates of their eight vertices in space.
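As a minimal sketch of reading one bounding-box file, the snippet below assumes that each .npy file stores an array that reshapes to (8, 3), i.e., eight vertices with one (x, y, z) row each. The array shape, the helper names `load_bbox` and `bbox_extent`, and the demo file name are assumptions for illustration and are not confirmed by the dataset itself.

```python
import itertools
import os
import tempfile

import numpy as np


def load_bbox(path):
    """Load a bounding box and return its vertices as an (8, 3) float array.

    Assumption: the file holds exactly 8 vertices with (x, y, z) coordinates.
    """
    vertices = np.asarray(np.load(path), dtype=np.float64).reshape(-1, 3)
    if vertices.shape != (8, 3):
        raise ValueError(f"expected 8 vertices, got shape {vertices.shape}")
    return vertices


def bbox_extent(vertices):
    """Return the axis-aligned min corner, max corner, and size of the box."""
    lo = vertices.min(axis=0)
    hi = vertices.max(axis=0)
    return lo, hi, hi - lo


if __name__ == "__main__":
    # Synthetic unit cube so the sketch runs without the dataset.
    cube = np.array(list(itertools.product([0.0, 1.0], repeat=3)))
    with tempfile.TemporaryDirectory() as tmp:
        demo_path = os.path.join(tmp, "demo_bbox.npy")  # hypothetical file name
        np.save(demo_path, cube)
        lo, hi, size = bbox_extent(load_bbox(demo_path))
    print("min:", lo, "max:", hi, "size:", size)
```

If the real files use a different layout, only the shape check in `load_bbox` should need to change.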

Reproduction

First, create your Anaconda environment from the provided “pcsod.yml” file. Then, download our PCSOD dataset from OpenDatasets and the trained model from Baidu Pan [code: skto], and place them in the following directory structure:

    code\
    ├── Data\
    │   ├── train\
    │   └── test\
    ├── log\
    │   └── pcsod\
    │       ├── logs\
    │       └── checkpoints\
    │           └── best_model.pth
    ├── train.py
    ├── test.py
    └──...
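Before running the scripts, it can help to confirm that the files are where the layout above expects them. The following is a small sketch using only the standard library. The `EXPECTED` list and the `missing_entries` helper are hypothetical names, and placing `checkpoints\` under `log\pcsod\` is an assumption read from the tree.

```python
from pathlib import Path

# Relative paths taken from the directory tree above. The nesting of
# checkpoints/ under log/pcsod/ is an assumption.
EXPECTED = [
    "Data/train",
    "Data/test",
    "log/pcsod/checkpoints/best_model.pth",
    "train.py",
    "test.py",
]


def missing_entries(root, expected=EXPECTED):
    """Return the expected relative paths that do not exist under `root`."""
    root = Path(root)
    return [rel for rel in expected if not (root / rel).exists()]


if __name__ == "__main__":
    missing = missing_entries("code")
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("Layout looks complete.")
```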

Test our model and save its predictions with the command below. For convenient comparison, you can also download our off-the-shelf predictions from Baidu Pan [code: skto]. We recommend CloudCompare for browsing the dataset and the predictions.

python test.py --log_dir pcsod

Retrain our model

python train.py

One-key Evaluation

This is a one-key evaluation toolbox for point cloud SOD, adapted from the image-based Evaluate SOD toolbox. Put the predictions and ground truths under the following directory:

    one-key evaluation\
    ├── gt\
    │   └── PCSOD\
    │       ├── test_0000.ply
    │       └── test_0001.ply
    ├── pred\
    │   └── PointNet\
    │       └── PCSOD\
    │           ├── test_0000.ply
    │           └── test_0001.ply
    ├── main.py
    └──...

Usage:

python main.py
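The layout above implies that each prediction is matched to a ground-truth file with the same name. The sketch below shows one way to check that pairing before running the evaluation. It is not the toolbox's own code: `pair_files` is a hypothetical helper, and the directory names in the usage example are read from the tree.

```python
from pathlib import Path


def pair_files(gt_dir, pred_dir, suffix=".ply"):
    """Match ground-truth and prediction files that share a file name.

    Returns (pairs, unmatched): `pairs` is a list of (gt, pred) paths sorted
    by file name, and `unmatched` is a sorted list of file names that are
    present on only one side.
    """
    gt = {p.name: p for p in Path(gt_dir).glob(f"*{suffix}")}
    pred = {p.name: p for p in Path(pred_dir).glob(f"*{suffix}")}
    shared = sorted(gt.keys() & pred.keys())
    unmatched = sorted(gt.keys() ^ pred.keys())
    return [(gt[name], pred[name]) for name in shared], unmatched


if __name__ == "__main__":
    pairs, unmatched = pair_files("gt/PCSOD", "pred/PointNet/PCSOD")
    print(f"{len(pairs)} matched pairs, {len(unmatched)} unmatched files")
```

A non-empty `unmatched` list usually means a method's predictions are incomplete or were saved under different file names.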

Benchmark

This benchmark will be updated continuously. Note that the results reported here may differ slightly from those in our paper because the data were converted from 4-byte '.npy' to 8-byte '.ply' files.

| Method | Pub. | MAE | F-measure | E-measure | IoU | Results |
|---|---|---|---|---|---|---|
| PointSal (Ours) | ECCV'22 | .068 | .772 | .853 | .658 | Baidu Pan [code: skto] |
| PointNet | CVPR'17 | .116 | .634 | .770 | .520 | Baidu Pan [code: skto] |
| PointNet2 | NeurIPS'17 | .077 | .741 | .817 | .610 | Baidu Pan [code: skto] |
| PointCNN | NeurIPS'18 | .150 | .244 | .412 | .152 | Baidu Pan [code: skto] |
| ShellNet | ICCV'19 | .073 | .756 | .850 | .650 | Baidu Pan [code: skto] |
| RandLA | TPAMI'21 | .127 | .635 | .742 | .519 | Baidu Pan [code: skto] |
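For readers unfamiliar with the columns, the sketch below shows how MAE, F-measure, and IoU are commonly defined for per-point saliency. It is an illustration under stated assumptions, not the evaluation toolbox's implementation: the 0.5 binarization threshold and the weight `beta2 = 0.3` (a common choice in image SOD) are assumed values, the function name `sod_metrics` is hypothetical, and E-measure is omitted.

```python
import numpy as np


def sod_metrics(pred, gt, threshold=0.5, beta2=0.3):
    """Compute MAE, F-measure, and IoU for one point cloud.

    pred: per-point saliency scores in [0, 1], shape (N,)
    gt:   per-point binary labels (0 or 1), shape (N,)
    threshold and beta2 are assumed values, not taken from the toolbox.
    """
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)

    # Mean absolute error between raw scores and labels.
    mae = np.abs(pred - gt).mean()

    # Binarize before computing the overlap-based metrics.
    pred_bin = pred >= threshold
    gt_bin = gt >= 0.5
    tp = np.logical_and(pred_bin, gt_bin).sum()
    union = np.logical_or(pred_bin, gt_bin).sum()

    eps = 1e-8  # avoids division by zero on empty masks
    precision = tp / (pred_bin.sum() + eps)
    recall = tp / (gt_bin.sum() + eps)
    f_measure = (1 + beta2) * precision * recall / (beta2 * precision + recall + eps)
    iou = tp / (union + eps)
    return {"MAE": float(mae), "F-measure": float(f_measure), "IoU": float(iou)}


if __name__ == "__main__":
    print(sod_metrics([0.9, 0.8, 0.1, 0.2], [1, 0, 0, 0]))
```

Dataset-level scores would then typically be averaged over all test samples, though the exact aggregation used for the table is not stated here.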

Citation

Please cite our paper if you find our work helpful.

    @inproceedings{fan2022salient,
      title={Salient Object Detection for Point Clouds},
      author={Fan, Songlin and Gao, Wei and Li, Ge},
      booktitle={European Conference on Computer Vision},
      pages={1--19},
      year={2022},
      organization={Springer}
    }

Acknowledgement

Our code is built upon PointNet, RandLA-Net, and Evaluate SOD.
