OpenDIC

OpenDIC is an open-source algorithm library for deep-learning-based image compression (DIC). We collect DIC methods, provide source code in MindSpore, PyTorch, or TensorFlow, and test their performance.

Contact and References

Coordinator: Asst. Prof. Wei Gao (Shenzhen Graduate School, Peking University)

If you have suggestions for improving this open-source library, please contact the coordinator by email: gaowei262@pku.edu.cn. We welcome more participants to submit code to this collection. To obtain access, send your OpenI ID to the email address above.

List of Contributors

Contributors:

Asst. Prof. Wei Gao (Shenzhen Graduate School, Peking University)

Mr. Hua Ye (Peng Cheng Laboratory)

Mr. Yongchi Zhang (Peng Cheng Laboratory)

Mr. Zhuozhen Yu (Shenzhen Graduate School, Peking University)

Mr. Chenhao Zhang (Shenzhen Graduate School, Peking University)

Mr. Kaiyu Zheng (Shenzhen Graduate School, Peking University)

etc.

Table of Contents

1.1 Coarse2Fine (by Chenhao Zhang, Hua Ye)

1.2 Cheng2020Attention (by Chenhao Zhang, Hua Ye)

1.3 Checkerboard-Context-Model (by Chenhao Zhang, Hua Ye)

1.4 e2e_gdn (by Kaiyu Zheng, Hua Ye)

1.5 CAE (by Zhuozhen Yu, Hua Ye)

1.6 Imgcomp (by Zhuozhen Yu, Hua Ye)

1.7 bmshj2018 (by Kaiyu Zheng, Yongchi Zhang)

1.8 iwave (by Kaiyu Zheng, Yongchi Zhang)

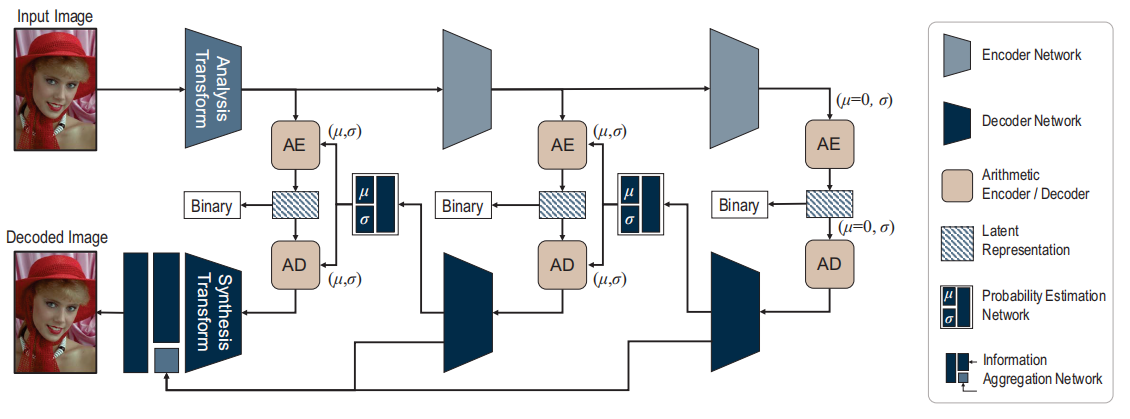

1.1 Coarse2Fine (by Chenhao Zhang, Hua Ye)

- 2020 AAAI Conference on Artificial Intelligence, Hyper-Prior Model.

- This paper proposes a coarse-to-fine framework with hierarchical layers of hyper-priors to conduct comprehensive analysis of the image and more effectively reduce spatial redundancy, which improves the rate-distortion performance of image compression significantly.

- Code for PyTorch and MindSpore is available.

- For more information, please go to Coarse2Fine.

Figure 1: Overall architecture of the multi-layer image compression framework, from Ref. [Hu Y, Yang W, Liu J. Coarse-to-fine hyper-prior modeling for learned image compression[C]//Proceedings of the AAAI Conference on Artificial Intelligence. 2020, 34(07): 11013-11020.]

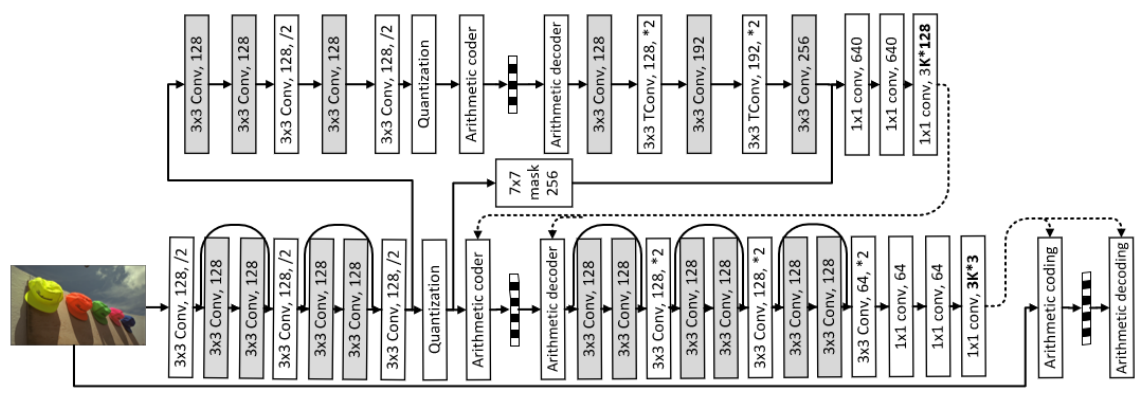

1.2 Cheng2020Attention (by Chenhao Zhang, Hua Ye)

- ICASSP 2020, HyperPrior

- This paper generalizes the hyperprior from lossy to lossless compression and adds an L2-norm term to the loss function to speed up training. It also investigates different parameterized models for the latent codes and proposes Gaussian mixture likelihoods to achieve adaptive and flexible context models.

- Code for PyTorch and MindSpore is available.

- For more information, please go to Cheng2020Attention.

Figure 2: Network architecture of lossless image compression, from Ref. [Cheng Z, Sun H, Takeuchi M, et al. Learned lossless image compression with a hyperprior and discretized gaussian mixture likelihoods[C]//ICASSP 2020-2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2020: 2158-2162.]

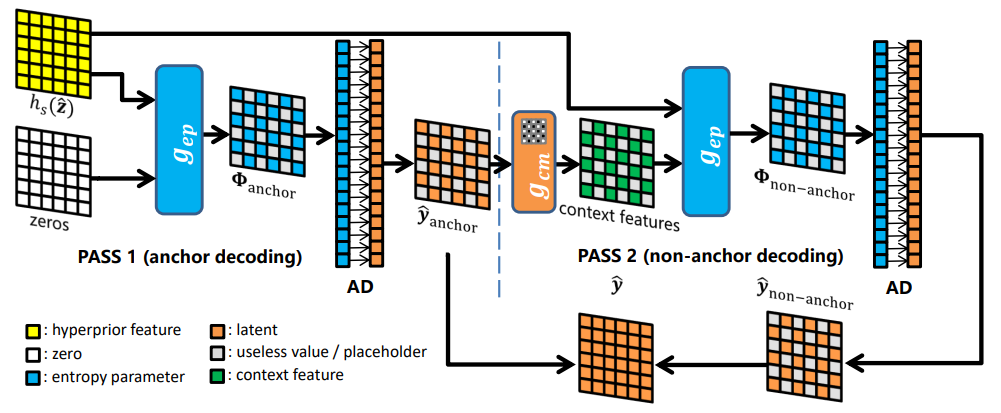
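The Gaussian mixture likelihood mentioned above can be illustrated with a minimal sketch. It assumes integer-valued latent symbols and assigns each symbol the mixture's probability mass on the interval of width 1 centered on it. The function names and the example mixture parameters are hypothetical and are not taken from the repository.

```python
import math


def gaussian_cdf(x, mean, scale):
    """CDF of a Gaussian with the given mean and standard deviation."""
    return 0.5 * (1.0 + math.erf((x - mean) / (scale * math.sqrt(2.0))))


def discretized_gmm_likelihood(symbol, weights, means, scales):
    """Probability mass of an integer symbol under a Gaussian mixture.

    Each component contributes its mass on [symbol - 0.5, symbol + 0.5],
    weighted by the component's mixture weight.
    """
    total = 0.0
    for weight, mean, scale in zip(weights, means, scales):
        upper = gaussian_cdf(symbol + 0.5, mean, scale)
        lower = gaussian_cdf(symbol - 0.5, mean, scale)
        total += weight * (upper - lower)
    return total


# Hypothetical two-component mixture for a single latent element.
weights = [0.7, 0.3]
means = [0.0, 4.0]
scales = [1.0, 2.0]

p = discretized_gmm_likelihood(0, weights, means, scales)
bits = -math.log2(p)  # ideal code length of this symbol under the model
```

In a learned codec the weights, means, and scales would be predicted per latent element by the network. Here they are fixed constants for illustration only.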

1.3 Checkerboard-Context-Model (by Chenhao Zhang, Hua Ye)

- CVPR 2021

- In this paper, a two-pass checkerboard context calculation eliminates the decoding limitations on spatial locations by reorganizing the decoding order. In the paper's experiments, it speeds up decoding by more than 40 times, significantly improving computational efficiency at almost the same rate-distortion performance.

- Code for PyTorch and MindSpore is available.

- For more information, please go to Checkerboard-Context-Model.

Figure 3: Illustration of the proposed two-pass decoding, from Ref. [He D, Zheng Y, Sun B, et al. Checkerboard context model for efficient learned image compression[C]//Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2021: 14771-14780.]

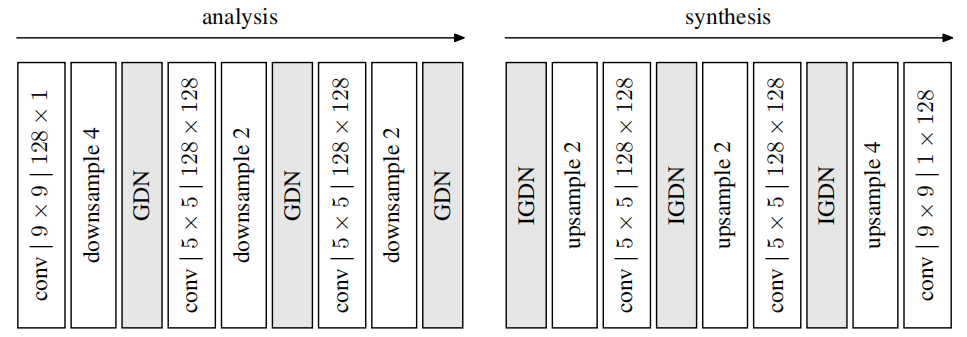
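The two-pass idea described above can be sketched as a split of latent positions into two checkerboard sets. This is a simplified illustration, not the repository's implementation: which parity is treated as the anchor set is an assumption, and the four-connected neighborhood stands in for the larger masked convolution a real context model would use.

```python
def checkerboard_passes(height, width):
    """Split grid positions into anchor and non-anchor sets by checkerboard parity."""
    anchors, non_anchors = [], []
    for row in range(height):
        for col in range(width):
            if (row + col) % 2 == 0:
                anchors.append((row, col))      # pass 1: decoded without spatial context
            else:
                non_anchors.append((row, col))  # pass 2: decoded using anchor context
    return anchors, non_anchors


def context_neighbors(position, height, width):
    """Four-connected neighbors of a position that lie inside the grid."""
    row, col = position
    candidates = [(row - 1, col), (row + 1, col), (row, col - 1), (row, col + 1)]
    return [(r, c) for r, c in candidates if 0 <= r < height and 0 <= c < width]


anchors, non_anchors = checkerboard_passes(4, 4)
anchor_set = set(anchors)

# Every context position of a non-anchor is an anchor, so once pass 1 is done
# all non-anchors can be decoded in parallel in pass 2.
pass_two_is_parallel = all(
    neighbor in anchor_set
    for position in non_anchors
    for neighbor in context_neighbors(position, 4, 4)
)
```

Because no non-anchor depends on another non-anchor, the serial raster-scan dependency of an autoregressive context model disappears, which is where the decoding speedup comes from.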

1.4 e2e_gdn (by Kaiyu Zheng, Hua Ye)

- ICLR 2017

- This paper describes an image compression method, consisting of a nonlinear analysis transformation, a uniform quantizer, and a nonlinear synthesis transformation. The transforms are constructed in three successive stages of convolutional linear filters and nonlinear activation functions.

- Code for PyTorch and MindSpore is available.

- For more information, please go to e2e_gdn.

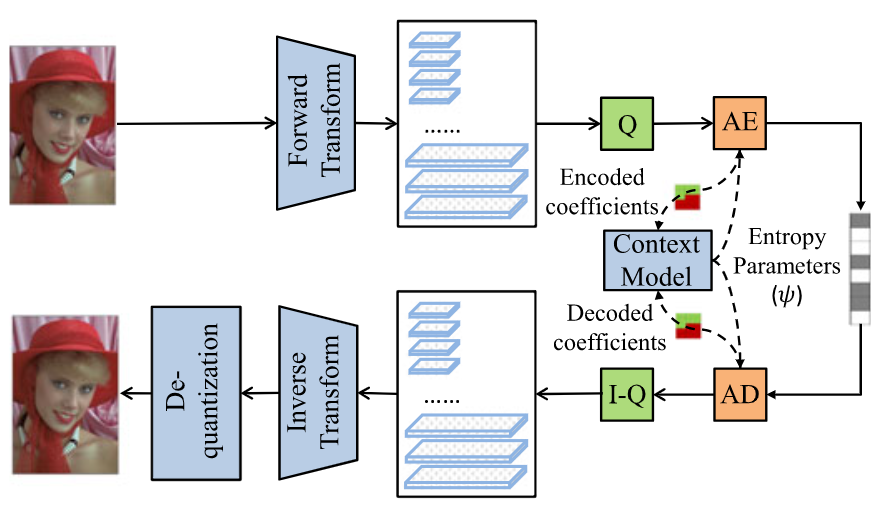

Figure 4: Network structure of e2e_gdn, from Ref. [Ballé J, Laparra V, Simoncelli E P. End-to-end optimized image compression[J]. arXiv preprint arXiv:1611.01704, 2016.]
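The nonlinearity in the cited paper is generalized divisive normalization (GDN), and the quantizer is uniform. A minimal sketch of both follows, assuming a simplified GDN applied across the channels at one spatial position, with no convolutions or learned parameters. The parameter values are hypothetical.

```python
import math


def gdn(channels, beta, gamma):
    """Simplified GDN: y_i = x_i / sqrt(beta_i + sum_j gamma_ij * x_j**2)."""
    out = []
    for i, x_i in enumerate(channels):
        norm = beta[i] + sum(g * x_j * x_j for g, x_j in zip(gamma[i], channels))
        out.append(x_i / math.sqrt(norm))
    return out


def uniform_quantize(values, step=1.0):
    """Round each value to the nearest multiple of step (ties round upward)."""
    return [step * math.floor(v / step + 0.5) for v in values]


# Hypothetical two-channel input and GDN parameters.
x = [3.0, 4.0]
beta = [1.0, 1.0]
gamma = [[0.1, 0.1], [0.1, 0.1]]

y = gdn(x, beta, gamma)            # normalized (analysis-side) responses
q = uniform_quantize(y, step=0.5)  # values passed on to the entropy coder
```

In the full model, each of the three analysis stages would apply a convolution followed by GDN, and the synthesis transform would mirror it with an approximate inverse.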

1.5 CAE (by Zhuozhen Yu, Hua Ye)

- ICLR 2017

- This paper proposes a new approach to the problem of optimizing autoencoders for lossy image compression.

- Code for PyTorch and MindSpore is available.

- For more information, please go to CAE.

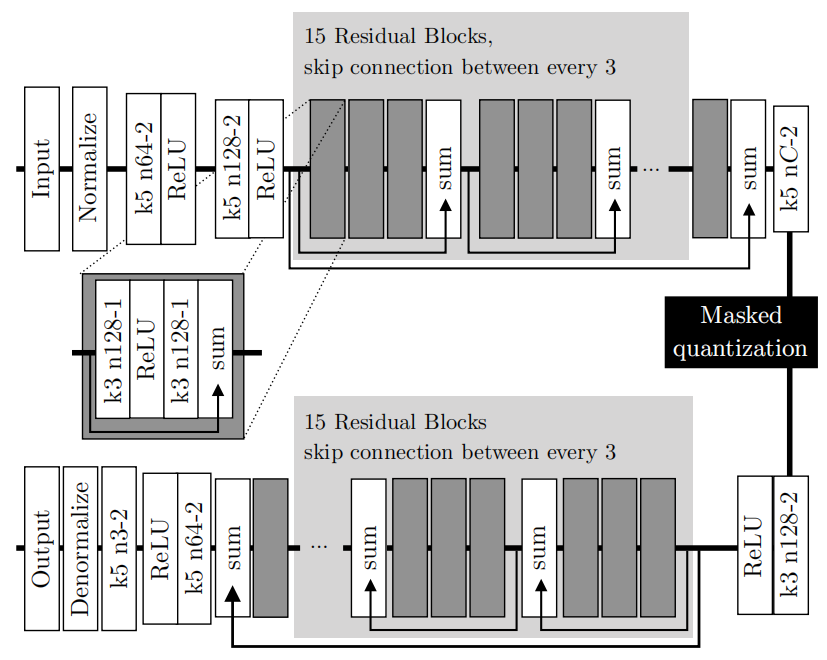

Figure 5: Illustration of the compressive autoencoder architecture used in this paper, from Ref. [Theis L, Shi W, Cunningham A, et al. Lossy image compression with compressive autoencoders[J]. arXiv preprint arXiv:1703.00395, 2017.]

1.6 Imgcomp (by Zhuozhen Yu, Hua Ye)

- CVPR 2018

- This paper proposes a new technique to navigate the rate-distortion trade-off for an image compression auto-encoder. The main idea is to directly model the entropy of the latent representation with a context model: a 3D-CNN that learns a conditional probability model of the latent distribution of the auto-encoder.

- Code for TensorFlow and MindSpore is available.

- For more information, please go to Imgcomp.

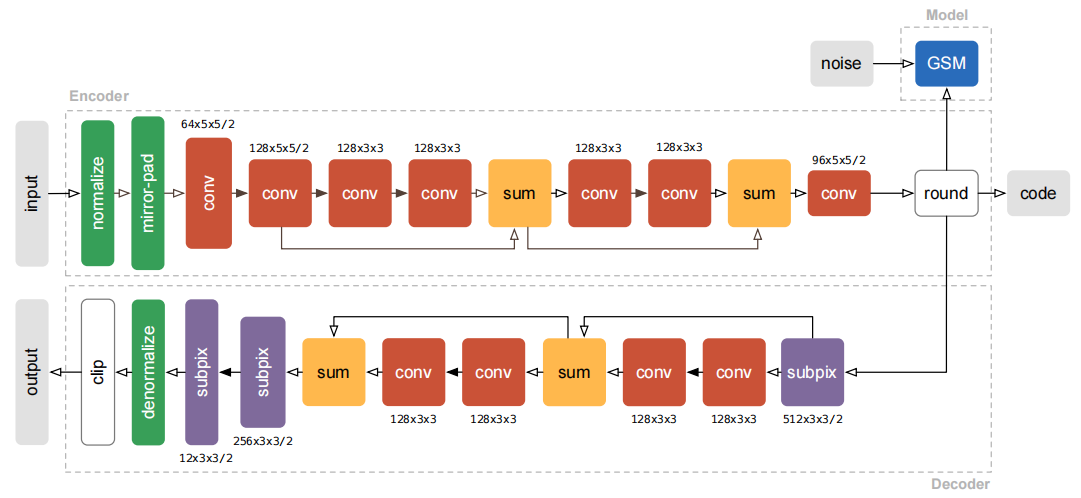

Figure 6: The architecture of auto-encoder, from Ref. [Mentzer F, Agustsson E, Tschannen M, et al. Conditional probability models for deep image compression[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018: 4394-4402.]
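The rate-distortion trade-off described above can be sketched as a single loss that adds a weighted rate term to the distortion. This assumes the context model has already produced a conditional probability for each latent symbol. The helper names and the weight `beta` are hypothetical, and mean squared error is used only as an example distortion.

```python
import math


def rate_in_bits(conditional_probs):
    """Ideal code length: sum of -log2 p(symbol | context) over all symbols."""
    return sum(-math.log2(p) for p in conditional_probs)


def mean_squared_error(original, reconstruction):
    """Average squared difference between two equal-length sequences."""
    return sum((a - b) ** 2 for a, b in zip(original, reconstruction)) / len(original)


def rate_distortion_loss(original, reconstruction, conditional_probs, beta):
    """Distortion plus beta times rate; beta is a hypothetical trade-off weight."""
    distortion = mean_squared_error(original, reconstruction)
    return distortion + beta * rate_in_bits(conditional_probs)
```

For example, `rate_distortion_loss([0.0, 2.0], [0.0, 0.0], [0.5, 0.25], beta=0.1)` combines a distortion of 2.0 with a rate of 3 bits. A larger `beta` pushes training toward lower bitrates at the cost of higher distortion.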

1.7 bmshj2018 (by Kaiyu Zheng, Yongchi Zhang)

- ICLR 2018

- This paper proposes an end-to-end trainable model for image compression based on variational autoencoders. It outperforms other artificial-neural-network-based methods on visual quality metrics such as MS-SSIM and PSNR.

- Code for PyTorch and MindSpore is available.

- For more information, please go to bmshj2018.

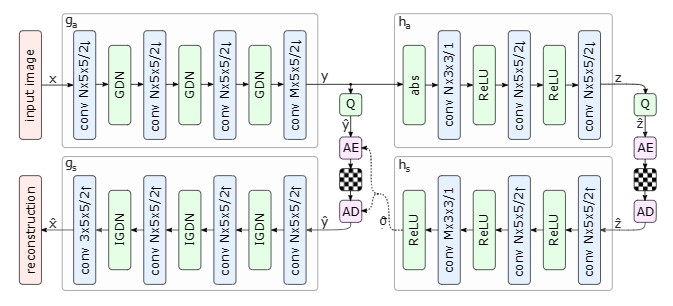

Figure 7: Network architecture of the bmshj2018-hyperprior, from Ref. [Ballé J, Minnen D, et al. Variational image compression with a scale hyperprior[C]//International Conference on Learning Representations. 2018.]
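Of the two quality metrics named above, PSNR is simple enough to sketch directly. The example below assumes flattened pixel sequences and an 8-bit peak value of 255. The function name and signature are illustrative and are not part of the repository.

```python
import math


def psnr(original, reconstruction, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel sequences."""
    mse = sum((a - b) ** 2 for a, b in zip(original, reconstruction)) / len(original)
    if mse == 0:
        return math.inf  # identical inputs: no distortion
    return 10.0 * math.log10(max_value ** 2 / mse)
```

Higher values mean a closer reconstruction. MS-SSIM is considerably more involved and is usually taken from an existing library rather than reimplemented.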

1.8 iwave (by Kaiyu Zheng, Yongchi Zhang)

- TPAMI 2022

- This paper proposes a new end-to-end optimized image compression scheme, in which iwave, a trained wavelet-like transform, converts images into coefficients without any information loss.

- Code for PyTorch and MindSpore is available.

- For more information, please go to iwave.

Figure 8: The architecture of iwave, from Ref. [Ma H, Liu D, et al. End-to-end optimized versatile image compression with wavelet-like transform[J]. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2022.]
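One standard way to build a wavelet-like transform without information loss is the lifting scheme, sketched below on a one-dimensional integer signal. That this construction matches the repository's code is an assumption. The `predict` and `update` functions here are simple hypothetical stand-ins, and in a trained transform they would be learned.

```python
def lifting_forward(signal, predict, update):
    """One integer lifting step: split, predict, update. Signal length must be even."""
    even, odd = signal[0::2], signal[1::2]
    detail = [o - predict(e) for e, o in zip(even, odd)]
    approx = [e + update(d) for e, d in zip(even, detail)]
    return approx, detail


def lifting_inverse(approx, detail, predict, update):
    """Undo lifting_forward exactly by reversing the steps with opposite signs."""
    even = [a - update(d) for a, d in zip(approx, detail)]
    odd = [d + predict(e) for e, d in zip(even, detail)]
    signal = [0] * (2 * len(even))
    signal[0::2], signal[1::2] = even, odd
    return signal


def predict(even_sample):
    """Hypothetical predictor: guess the odd sample from its even neighbor."""
    return even_sample


def update(detail_sample):
    """Hypothetical update: integer half of the detail coefficient."""
    return detail_sample // 2


signal = [5, 7, 3, 10, -2, 4]
approx, detail = lifting_forward(signal, predict, update)
restored = lifting_inverse(approx, detail, predict, update)
```

The inverse holds for any deterministic integer-valued `predict` and `update`, because each step is undone by subtracting exactly what was added. This is why the transform itself loses no information.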

Apache-2.0