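Hello, I just found that predict.py is missing when I try to run machine-translation inference on an NPU server. Could you please provide it?

I tried using the other python_*.py files instead, but they fail with the error below (ImportError: cannot import name '_executor' from 'mindspore.common.api'). My guess is that some packages are out of date.

Traceback (most recent call last):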

File "/code/mPanGu-Alpha-53/mPanGu_alpha_NPU/scripts/../predict-langs-zh.py", line 31, in

from src.serialization import load_distributed_checkpoint

File "/code/mPanGu-Alpha-53/mPanGu_alpha_NPU/src/serialization.py", line 40, in

from mindspore.common.api import _executor

ImportError: cannot import name '_executor' from 'mindspore.common.api' (/usr/local/python3.7.5/lib/python3.7/site-packages/mindspore/common/api.py)

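I installed mindspore-ascend 1.10. Is that what causes the error above? The README says the NPU runtime environment is MindSpore 1.3. Is 1.3 strictly required? Do versions later than 1.3 not work?

Here is a version for MindSpore 1.7 that you can use as a reference: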

https://openi.pcl.ac.cn/PCL-Platform.Intelligence/AISynergy/src/branch/V2.0.0/examples/mPanGu

Or: https://openi.pcl.ac.cn/PCL-Platform.Intelligence/mPanGu-Alpha-53/src/branch/master/mPanGu_alpha_ms1.7
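Since the scripts in this thread target specific MindSpore releases (1.3 and 1.7) while the installed package is 1.10, a small check can flag the mismatch before anything is launched. The sketch below is only an illustration: the helper names are hypothetical, and it compares plain version strings rather than querying MindSpore itself. Components are parsed as integers because a plain string comparison would order "1.10" before "1.3".

```python
def parse_major_minor(version):
    """Return (major, minor) as integers from a string such as '1.10.1'."""
    parts = version.split(".")
    return int(parts[0]), int(parts[1])


def matches_target(installed, target):
    """True when both versions share the same major.minor release."""
    return parse_major_minor(installed) == parse_major_minor(target)


# Versions mentioned in this thread: the image ships 1.10.1,
# the README names 1.3, and the linked scripts target 1.7.
print(matches_target("1.10.1", "1.3"))  # False
print(matches_target("1.10.1", "1.7"))  # False
print(matches_target("1.7.0", "1.7"))   # True
```

A mismatch does not by itself mean the scripts cannot run, only that API differences such as the missing `_executor` import are more likely.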

If you are loading the complete single-file model provided by the project, change the model-loading code as follows.

In mPanGu/predict.py, line 137:

from mindspore.train.serialization import load_checkpoint, load_param_into_net

param_dict = load_checkpoint(local_ckpt_path)

load_param_into_net(eval_net, param_dict, strict_load=False)

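I tried several times this afternoon and kept running into various problems. For this task I do not need training or fine-tuning, only translation inference on a 910 NPU. Could you provide a minimal NPU inference demo?

After modifying ...../AISynergy/src/branch/V2.0.0/examples/mPanGu/predict.py as you described, I ran the following command: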

python -s predict.py --strategy_load_ckpt_path=/code/mPanGu-Alpha-53/mPanGu_alpha_NPU/tokenizer/mPanGu_53-26b-128k-m1d128-22m1d24-exp0-strategy.ckptstrategy.ckpt --tokenizer_path=/code/mPanGu-Alpha-53/mPanGu_alpha_NPU/tokenizer/ --load_ckpt_path=/code/mPanGu-Alpha-53/mPanGu_alpha_NPU/models/ --load_ckpt_name=mPanGu_Alpha-53_fp16.ckpt --mode=2.6B --run_type=predict --param_init_type=fp16 --distribute=false --device_target=Ascend

It fails with the following error:

[WARNING] ME(4134:281472764620000,MainProcess):2023-06-02-02:20:05.422.500 [mindspore/context.py:264] For 'context.set_context', the parameter 'variable_memory_max_size' is deprecated, and will be removed in a future version. Please use parameter 'max_device_memory' instead.

local_rank:0, start to run...

[WARNING] ME(4134:281472764620000,MainProcess):2023-06-02-02:20:05.423.152 [mindspore/nn/transformer/transformer.py:250] TransformerRecomputeConfig is recommended as the recompute configuration type.

Traceback (most recent call last):

File "predict.py", line 200, in

main()

File "predict.py", line 192, in main

model_predict, config = load_model(opt)

File "predict.py", line 87, in load_model

recompute=True)

File "/usr/local/python3.7.5/lib/python3.7/site-packages/mindspore/nn/transformer/transformer.py", line 236, in init

vocab_emb_dp=vocab_emb_dp)

File "/usr/local/python3.7.5/lib/python3.7/site-packages/mindspore/nn/transformer/transformer.py", line 84, in init

self._dp_mp_config = OpParallelConfig(data_parallel=data_parallel, model_parallel=model_parallel)

File "/usr/local/python3.7.5/lib/python3.7/site-packages/mindspore/nn/transformer/op_parallel_config.py", line 112, in init

Validator.check_positive_int(data_parallel, "data_parallel")

File "/usr/local/python3.7.5/lib/python3.7/site-packages/mindspore/_checkparam.py", line 274, in check_positive_int

return check_number(arg_value, 0, Rel.GT, int, arg_name, prim_name)

File "/usr/local/python3.7.5/lib/python3.7/site-packages/mindspore/_checkparam.py", line 171, in check_number

raise type_except(f"{prim_info} must be {arg_type.name} and must {rel_str}, "

ValueError: The 'data_parallel' must be int and must > 0, but got '0' with type 'int'.
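One plausible explanation for the value 0, offered as an assumption because predict.py itself is not shown in this thread: if the script derives the data-parallel size by integer-dividing the device count by the model-parallel size, then the values in the argument dump (device_num=1, op_level_model_parallel_num=8) give 1 // 8 = 0. The function name below is hypothetical.

```python
def derive_data_parallel(device_num, model_parallel_num):
    """Assumed derivation: devices left over after model parallelism."""
    data_parallel = device_num // model_parallel_num
    if data_parallel <= 0:
        raise ValueError(
            f"data_parallel would be {data_parallel}: device_num={device_num} "
            f"is smaller than model_parallel_num={model_parallel_num}"
        )
    return data_parallel


# One card with a model-parallel size of 1 gives a valid layout.
print(derive_data_parallel(1, 1))  # 1

# One card with a model-parallel size of 8 reproduces the zero.
try:
    derive_data_parallel(1, 8)
except ValueError as err:
    print(err)
```

If this is indeed the cause, lowering the model-parallel size to match the single card addresses the root of the problem, whereas hard-coding the data-parallel size leaves the model-parallel size at 8.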

I then changed the code to data_parallel_num = 1, and got another error:

[WARNING] ME(4216:281472190786784,MainProcess):2023-06-02-02:24:44.73. [mindspore/context.py:264] For 'context.set_context', the parameter 'variable_memory_max_size' is deprecated, and will be removed in a future version. Please use parameter 'max_device_memory' instead.

local_rank:0, start to run...

[WARNING] ME(4216:281472190786784,MainProcess):2023-06-02-02:24:44.726. [mindspore/nn/transformer/transformer.py:250] TransformerRecomputeConfig is recommended as the recompute configuration type.

===config is: ==============================[PANGUALPHAConfig]==============================

--batch_size:1

--seq_length:1024

--vocab_size:128320

--hidden_size:2560

--num_layers:32

--num_heads:32

--eod_token:6

--post_layernorm_residual:False

--load_ckpt_path:/code/mPanGu-Alpha-53/mPanGu_alpha_NPU/models/

--param_init_type:Float16

--dropout_rate:0.0

--compute_dtype:Float16

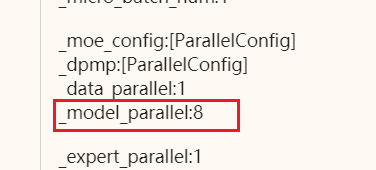

--parallel_config:[ParallelConfig]

_recompute:True

_optimizer_shard:False

_gradient_aggregation_group:4

_embed_dp_mp_config:[ParallelConfig]

_dp_mp_config:[ParallelConfig]

_data_parallel:1

_model_parallel:8

_vocab_emb_dp:True

_pp_config:[ParallelConfig]

_pipeline_stage:1

_micro_batch_num:1

_moe_config:[ParallelConfig]

_dpmp:[ParallelConfig]

_data_parallel:1

_model_parallel:8

_expert_parallel:1

--ffn_hidden_size:10240

--hidden_act:fast_gelu

--use_past:True

--eod_reset:False

--enable_offload:True

--softmax_compute_type:Float16

--use_moe:False

--expert_num:4

--per_token_num_experts_chosen:1

--run_type:train

--pad_token:6

=====args_opt is: Namespace(ckpt_name_prefix='pangu', data_column_name='input_ids', data_url='/cache/MindRecord_shuffle/', decay_steps=200000, device_id=0, device_num=1, device_target='Ascend', distribute='false', embedding_size=2560, enable_alltoall=0, end_lr=1e-06, end_token=9, eod_id=128298, eod_reset=1, epoch_size=1, eval_data_url=None, eval_steps=10, expert_num=1, expert_parallel_num=1, export=0, frequency_penalty=1.5, full_batch=1, gradient_aggregation_group=4, has_trained_epoches=0, has_trained_steps=0, hccl_connect_time=6000, incremental_training=0, keep_checkpoint_max=1, load_ckpt_name='mPanGu_Alpha-53_fp16.ckpt', load_ckpt_path='/code/mPanGu-Alpha-53/mPanGu_alpha_NPU/models/', max_generate_length=500, micro_batch_interleaved=1, micro_size=1, mode='2.6B', ng_port='17290', num_heads=32, num_layers=32, offline=1, op_level_model_parallel_num=8, opt_offload=0, optimizer='adam', optimizer_shard=1, padding_id=128297, parallel_mode='data_parallel', param_init_type='fp16', per_batch_size=1, per_token_num_experts_chosen=1, pre_trained=None, presence_penalty=0.3, recompute_slice_activation=0, run_type='predict', save_checkpoint=False, save_checkpoint_path=None, save_checkpoint_steps=2000, seq_length=1024, sink_size=2, stage_num=1, start_lr=5e-05, strategy_load_ckpt_path='/code/mPanGu-Alpha-53/mPanGu_alpha_NPU/tokenizer/mPanGu_53-26b-128k-m1d128-22m1d24-exp0-strategy.ckptstrategy.ckpt', tokenizer_path='/code/mPanGu-Alpha-53/mPanGu_alpha_NPU/tokenizer/', top_k_num=3, top_p=1.0, train_and_eval_mode=0, train_url=None, use_moe=0, use_past='true', use_pynative_op=0, vocab_size=128320, warmup_step=2000, word_emb_dp=1)

[WARNING] ME(4216:281472190786784,MainProcess):2023-06-02-02:24:49.505.934 [mindspore/ops/primitive.py:207] The in_strategy of the operator in your network will not take effect in stand_alone mode. This means the the shard function called in the network is ignored.

If you want to enable it, please use semi auto or auto parallel mode by context.set_auto_parallel_context(parallel_mode=ParallelMode.SEMI_AUTO_PARALLEL or context.set_auto_parallel_context(parallel_mode=ParallelMode.AUTO_PARALLEL)

[WARNING] ME(4216:281472190786784,MainProcess):2023-06-02-02:24:50.567.88 [mindspore/common/_decorator.py:38] 'DropoutGenMask' is deprecated from version 1.5 and will be removed in a future version, use 'ops.Dropout' instead.

[WARNING] ME(4216:281472190786784,MainProcess):2023-06-02-02:24:50.571.45 [mindspore/common/_decorator.py:38] 'DropoutDoMask' is deprecated from version 1.5 and will be removed in a future version, use 'ops.Dropout' instead.

[WARNING] ME(4216:281472190786784,MainProcess):2023-06-02-02:24:50.609.50 [mindspore/common/parameter.py:599] This interface may be deleted in the future.

pipeline stage id is 0

Traceback (most recent call last):

File "predict.py", line 200, in

main()

File "predict.py", line 192, in main

model_predict, config = load_model(opt)

File "predict.py", line 114, in load_model

pangu_alpha = PanguAlphaModel(config)

File "/code/aisynergy/examples/mPanGu/src/pangu_alpha.py", line 390, in init

self.backbone = PanguAlpha_Model(config)

File "/code/aisynergy/examples/mPanGu/src/pangu_alpha.py", line 260, in init

softmax_compute_type=config.softmax_compute_type).blocks

File "/usr/local/python3.7.5/lib/python3.7/site-packages/mindspore/log.py", line 625, in wrapper

res = func(*args, **kwargs)

File "/usr/local/python3.7.5/lib/python3.7/site-packages/mindspore/nn/transformer/layers.py", line 67, in wrapper

return func(*args, **kwargs)

File "/usr/local/python3.7.5/lib/python3.7/site-packages/mindspore/nn/transformer/transformer.py", line 2457, in init

offset=offset, parallel_config=parallel_config)

File "/code/aisynergy/examples/mPanGu/src/pangu_alpha.py", line 219, in set_parallel_configure_for_layer

if parallel_config.recompute.recompute:

AttributeError: 'bool' object has no attribute 'recompute'

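Are there other packages that need to be modified? Or are the arguments I pass at launch wrong?

Please describe your runtime environment. How many cards are you using for inference?

Inference currently needs only 1 card, with model parallelism mp=1 and data parallelism dp=1. Your configuration still looks incorrect.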

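The runtime environment is the Docker image pulled from the MindSpore website with the command below:

docker pull swr.cn-south-1.myhuaweicloud.com/mindspore/mindspore-ascend:1.10.1

mindspore-ascend is version 1.10, and inference uses one Ascend 910 card.

As you suggested, I set mp=1 and dp=1, but the same error is still raised. The modified predict.py is attached.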

[WARNING] ME(4513:281462233050336,MainProcess):2023-06-02-05:20:05.476.286 [mindspore/context.py:264] For 'context.set_context', the parameter 'variable_memory_max_size' is deprecated, and will be removed in a future version. Please use parameter 'max_device_memory' instead.

local_rank:0, start to run...

[WARNING] ME(4513:281462233050336,MainProcess):2023-06-02-05:20:05.476.939 [mindspore/nn/transformer/transformer.py:250] TransformerRecomputeConfig is recommended as the recompute configuration type.

===config is: ==============================[PANGUALPHAConfig]==============================

--batch_size:1

--seq_length:1024

--vocab_size:128320

--hidden_size:2560

--num_layers:32

--num_heads:32

--eod_token:6

--post_layernorm_residual:False

--load_ckpt_path:/code/mPanGu-Alpha-53/mPanGu_alpha_NPU/models/

--param_init_type:Float16

--dropout_rate:0.0

--compute_dtype:Float16

--parallel_config:[ParallelConfig]

_recompute:True

_optimizer_shard:False

_gradient_aggregation_group:4

_embed_dp_mp_config:[ParallelConfig]

_dp_mp_config:[ParallelConfig]

_data_parallel:1

_model_parallel:1

_vocab_emb_dp:True

_pp_config:[ParallelConfig]

_pipeline_stage:1

_micro_batch_num:1

_moe_config:[ParallelConfig]

_dpmp:[ParallelConfig]

_data_parallel:1

_model_parallel:1

_expert_parallel:1

--ffn_hidden_size:10240

--hidden_act:fast_gelu

--use_past:True

--eod_reset:False

--enable_offload:True

--softmax_compute_type:Float16

--use_moe:False

--expert_num:4

--per_token_num_experts_chosen:1

--run_type:train

--pad_token:6

=====args_opt is: Namespace(ckpt_name_prefix='pangu', data_column_name='input_ids', data_url='/cache/MindRecord_shuffle/', decay_steps=200000, device_id=0, device_num=1, device_target='Ascend', distribute='false', embedding_size=2560, enable_alltoall=0, end_lr=1e-06, end_token=9, eod_id=128298, eod_reset=1, epoch_size=1, eval_data_url=None, eval_steps=10, expert_num=1, expert_parallel_num=1, export=0, frequency_penalty=1.5, full_batch=1, gradient_aggregation_group=4, has_trained_epoches=0, has_trained_steps=0, hccl_connect_time=6000, incremental_training=0, keep_checkpoint_max=1, load_ckpt_name='mPanGu_Alpha-53_fp16.ckpt', load_ckpt_path='/code/mPanGu-Alpha-53/mPanGu_alpha_NPU/models/', max_generate_length=500, micro_batch_interleaved=1, micro_size=1, mode='2.6B', ng_port='17290', num_heads=32, num_layers=32, offline=1, op_level_model_parallel_num=8, opt_offload=0, optimizer='adam', optimizer_shard=1, padding_id=128297, parallel_mode='data_parallel', param_init_type='fp16', per_batch_size=1, per_token_num_experts_chosen=1, pre_trained=None, presence_penalty=0.3, recompute_slice_activation=0, run_type='predict', save_checkpoint=False, save_checkpoint_path=None, save_checkpoint_steps=2000, seq_length=1024, sink_size=2, stage_num=1, start_lr=5e-05, strategy_load_ckpt_path='/code/mPanGu-Alpha-53/mPanGu_alpha_NPU/tokenizer/mPanGu_53-26b-128k-m1d128-22m1d24-exp0-strategy.ckptstrategy.ckpt', tokenizer_path='/code/mPanGu-Alpha-53/mPanGu_alpha_NPU/tokenizer/', top_k_num=3, top_p=1.0, train_and_eval_mode=0, train_url=None, use_moe=0, use_past='true', use_pynative_op=0, vocab_size=128320, warmup_step=2000, word_emb_dp=1)

[WARNING] ME(4513:281462233050336,MainProcess):2023-06-02-05:20:11.226.50 [mindspore/ops/primitive.py:207] The in_strategy of the operator in your network will not take effect in stand_alone mode. This means the the shard function called in the network is ignored.

If you want to enable it, please use semi auto or auto parallel mode by context.set_auto_parallel_context(parallel_mode=ParallelMode.SEMI_AUTO_PARALLEL or context.set_auto_parallel_context(parallel_mode=ParallelMode.AUTO_PARALLEL)

[WARNING] ME(4513:281462233050336,MainProcess):2023-06-02-05:20:11.624.470 [mindspore/common/_decorator.py:38] 'DropoutGenMask' is deprecated from version 1.5 and will be removed in a future version, use 'ops.Dropout' instead.

[WARNING] ME(4513:281462233050336,MainProcess):2023-06-02-05:20:11.624.799 [mindspore/common/_decorator.py:38] 'DropoutDoMask' is deprecated from version 1.5 and will be removed in a future version, use 'ops.Dropout' instead.

[WARNING] ME(4513:281462233050336,MainProcess):2023-06-02-05:20:11.628.558 [mindspore/common/parameter.py:599] This interface may be deleted in the future.

pipeline stage id is 0

Traceback (most recent call last):

File "predict.py", line 201, in

main()

File "predict.py", line 193, in main

model_predict, config = load_model(opt)

File "predict.py", line 115, in load_model

pangu_alpha = PanguAlphaModel(config)

File "/code/aisynergy/examples/mPanGu/src/pangu_alpha.py", line 390, in init

self.backbone = PanguAlpha_Model(config)

File "/code/aisynergy/examples/mPanGu/src/pangu_alpha.py", line 260, in init

softmax_compute_type=config.softmax_compute_type).blocks

File "/usr/local/python3.7.5/lib/python3.7/site-packages/mindspore/log.py", line 625, in wrapper

res = func(*args, **kwargs)

File "/usr/local/python3.7.5/lib/python3.7/site-packages/mindspore/nn/transformer/layers.py", line 67, in wrapper

return func(*args, **kwargs)

File "/usr/local/python3.7.5/lib/python3.7/site-packages/mindspore/nn/transformer/transformer.py", line 2457, in init

offset=offset, parallel_config=parallel_config)

File "/code/aisynergy/examples/mPanGu/src/pangu_alpha.py", line 219, in set_parallel_configure_for_layer

if parallel_config.recompute.recompute:

AttributeError: 'bool' object has no attribute 'recompute'

Let's look at this specific error:

if parallel_config.recompute.recompute:

AttributeError: 'bool' object has no attribute 'recompute'

The training and inference configurations differ here, so you probably need to change parallel_config.recompute.recompute to parallel_config.recompute, matching what you configured in predict.py:

parallel_config = TransformerOpParallelConfig(data_parallel=data_parallel_num,

model_parallel=model_parallel_num,

pipeline_stage=args_opt.stage_num,

micro_batch_num=args_opt.micro_size,

recompute=True)
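The AttributeError arises because `recompute` is a plain bool here, while the code in pangu_alpha.py expects an object with its own `.recompute` attribute (the earlier warning recommends TransformerRecomputeConfig for that role). As a sketch only, a helper that accepts both forms could look like the following; `RecomputeConfig` is a hypothetical stand-in class, not the MindSpore type, and `recompute_enabled` is not part of the project.

```python
class RecomputeConfig:
    """Hypothetical stand-in for a config object exposing a `.recompute` flag."""

    def __init__(self, recompute=True):
        self.recompute = recompute


def recompute_enabled(value):
    """Accept either a plain bool or an object with a `.recompute` attribute."""
    if isinstance(value, bool):
        return value
    return bool(value.recompute)


print(recompute_enabled(True))                    # True
print(recompute_enabled(RecomputeConfig(False)))  # False
```

With a helper like this, the check in set_parallel_configure_for_layer would not depend on whether the training or the inference style of configuration was used.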

I made all the changes you described, and now a new error appears:

pipeline stage id is 0

pipeline stage id is 0

pipeline stage id is 0

pipeline stage id is 0

pipeline stage id is 0

pipeline stage id is 0

pipeline stage id is 0

pipeline stage id is 0

pipeline stage id is 0

pipeline stage id is 0

pipeline stage id is 0

pipeline stage id is 0

pipeline stage id is 0

pipeline stage id is 0

pipeline stage id is 0

Traceback (most recent call last):

File "predict.py", line 204, in

main()

File "predict.py", line 196, in main

model_predict, config = load_model(opt)

File "predict.py", line 118, in load_model

pangu_alpha = PanguAlphaModel(config)

File "/code/aisynergy/examples/mPanGu/src/pangu_alpha.py", line 392, in init

self.backbone = PanguAlpha_Model(config)

File "/code/aisynergy/examples/mPanGu/src/pangu_alpha.py", line 297, in init

self.load_embedding_from_ckpt(config.load_ckpt_path)

File "/code/aisynergy/examples/mPanGu/src/pangu_alpha.py", line 355, in load_embedding_from_ckpt

self.embedding.word_embedding.embedding_table = Parameter(initializer(load_param(word_embedding_path),

File "/code/aisynergy/examples/mPanGu/src/pangu_alpha.py", line 346, in load_param

raise ValueError(f"{path} file not exits, "

ValueError: /code/mPanGu-Alpha-53/models/word_embedding.npy file not exits, please check whether embedding file exit.

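Where should I download word_embedding.npy? I did not see this file when downloading the model weights.

In predict.py, set load_ckpt_path=None here.

After that change, another new error appears: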

pipeline stage id is 0

pipeline stage id is 0

[WARNING] ME(7265:281463861488864,MainProcess):2023-06-02-08:32:30.943.568 [mindspore/common/_decorator.py:38] 'GatherV2' is deprecated from version 1.1 and will be removed in a future version, use 'Gather' instead.

======start load_distributed checkpoint

Loading from path /code/mPanGu-Alpha-53/mPanGu_alpha_NPU/models/filerted_0.ckpt

Traceback (most recent call last):

File "predict.py", line 206, in

main()

File "predict.py", line 198, in main

model_predict, config = load_model(opt)

File "predict.py", line 148, in load_model

param_dict = load_checkpoint(args_opt.local_ckpt_path)

AttributeError: 'Namespace' object has no attribute 'local_ckpt_path'

I then tried changing the failing line to param_dict = load_checkpoint(None), but it still fails:

pipeline stage id is 0

[WARNING] ME(6984:281464976911584,MainProcess):2023-06-02-08:26:54.889.09 [mindspore/common/_decorator.py:38] 'GatherV2' is deprecated from version 1.1 and will be removed in a future version, use 'Gather' instead.

======start load_distributed checkpoint

Loading from path /code/mPanGu-Alpha-53/mPanGu_alpha_NPU/models/filerted_0.ckpt

Traceback (most recent call last):

File "predict.py", line 206, in

main()

File "predict.py", line 198, in main

model_predict, config = load_model(opt)

File "predict.py", line 149, in load_model

param_dict = load_checkpoint(None)

File "/usr/local/python3.7.5/lib/python3.7/site-packages/mindspore/train/serialization.py", line 507, in load_checkpoint

ckpt_file_name = _check_ckpt_file_name(ckpt_file_name)

File "/usr/local/python3.7.5/lib/python3.7/site-packages/mindspore/train/serialization.py", line 567, in _check_ckpt_file_name

"but got {}.".format(type(ckpt_file_name)))

TypeError: For 'load_checkpoint', the argument 'ckpt_file_name' must be string, but got <class 'NoneType'>.

I then tried changing it directly to param_dict = load_checkpoint('/code/mPanGu-Alpha-53/mPanGu_alpha_NPU/models'), which fails as follows:

pipeline stage id is 0

[WARNING] ME(7823:281472502279392,MainProcess):2023-06-02-08:40:48.286.471 [mindspore/common/_decorator.py:38] 'GatherV2' is deprecated from version 1.1 and will be removed in a future version, use 'Gather' instead.

======start load_distributed checkpoint

Loading from path /code/mPanGu-Alpha-53/mPanGu_alpha_NPU/models/filerted_0.ckpt

Traceback (most recent call last):

File "predict.py", line 206, in

main()

File "predict.py", line 198, in main

model_predict, config = load_model(opt)

File "predict.py", line 149, in load_model

param_dict = load_checkpoint('/code/mPanGu-Alpha-53/mPanGu_alpha_NPU/models')

File "/usr/local/python3.7.5/lib/python3.7/site-packages/mindspore/train/serialization.py", line 507, in load_checkpoint

ckpt_file_name = _check_ckpt_file_name(ckpt_file_name)

File "/usr/local/python3.7.5/lib/python3.7/site-packages/mindspore/train/serialization.py", line 570, in _check_ckpt_file_name

raise ValueError("For 'load_checkpoint', the checkpoint file should end with '.ckpt', please "

ValueError: For 'load_checkpoint', the checkpoint file should end with '.ckpt', please input the correct 'ckpt_file_name'.

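1. Please read my previous reply carefully!

2. Please analyze the error message to resolve the problem: param_dict = load_checkpoint("/path/to/ckpt")

The argument here is the path of the model file you want to load, ending in ".ckpt".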
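The three failed attempts above (a missing attribute, None, and a directory) all come down to not passing a full file path. A minimal sketch of building and validating that path before calling load_checkpoint is shown below. The helper name is hypothetical; the directory and file name would come from the --load_ckpt_path and --load_ckpt_name arguments used in the command earlier in this thread.

```python
import os


def resolve_ckpt_file(ckpt_dir, ckpt_name):
    """Join directory and file name, then check the result is a .ckpt file."""
    path = os.path.join(ckpt_dir, ckpt_name)
    if not path.endswith(".ckpt"):
        raise ValueError(f"checkpoint path must end with '.ckpt', got: {path}")
    if not os.path.isfile(path):
        raise FileNotFoundError(f"checkpoint file not found: {path}")
    return path


# Passing only the directory, as in the last attempt above, is rejected early.
try:
    resolve_ckpt_file("/code/mPanGu-Alpha-53/mPanGu_alpha_NPU/models", "")
except ValueError as err:
    print(err)
```

The returned path is what would then be handed to load_checkpoint, for example resolve_ckpt_file(args_opt.load_ckpt_path, args_opt.load_ckpt_name).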

That problem is fixed, but I now hit an issue with JIEBATokenizer:

================load param ok=================

Traceback (most recent call last):

File "predict.py", line 206, in

main()

File "predict.py", line 202, in main

run_predict(model_predict, config, opt)

File "predict.py", line 179, in run_predict

os.path.join(args_opt.tokenizer_path, 'vocab10.model'))

File "/code/aisynergy/examples/mPanGu/src/tokenization_jieba.py", line 29, in init

f = open(vocab_file, 'r')

FileNotFoundError: [Errno 2] No such file or directory: '/code/mPanGu-Alpha-53/mPanGu_alpha_NPU/tokenizer/vocab10.vocab'

The tokenizer directory is missing two files: vocab10.vocab and vocab10.model.

https://openi.pcl.ac.cn/PCL-Platform.Intelligence/mPanGu-Alpha-53/src/branch/master/mPanGu_alpha_ms1.7/predict_exp22_integrated_inference.py#L167

Refer to the link above: mPanGu uses the vocabulary in the spm_13 directory, so load the corresponding vocabulary files.
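Before constructing the tokenizer, it can help to confirm that the expected vocabulary files are actually present. The sketch below uses a hypothetical helper and, as an example, the two file names from the traceback above; the names required in practice depend on which vocabulary predict.py is configured to load.

```python
import os


def missing_files(directory, required):
    """Return the names in `required` that are not regular files in `directory`."""
    return [
        name for name in required
        if not os.path.isfile(os.path.join(directory, name))
    ]


# File names taken from the traceback; the directory is the --tokenizer_path value.
needed = ["vocab10.vocab", "vocab10.model"]
absent = missing_files("/code/mPanGu-Alpha-53/mPanGu_alpha_NPU/tokenizer/", needed)
if absent:
    print("missing tokenizer files:", ", ".join(absent))
```

An empty result means every listed file was found in the directory.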

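After changing it to follow spm_13, the vocabulary loads correctly and the tokenizer works. Both the weights and the tokenizer now load onto the NPU, but translation fails with another error. I searched the suggested page https://www.mindspore.cn/search?inputValue=analyze_fail.dat and found nothing useful. Do I need to modify src/pangu_alpha.py?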

================load param ok=================

input_ids [83743, 29479, 119881, 122045, 121522, 119377, 11113, 119262]

np.array([src_input_ids]) [[128301 128301 128301 83743 29479 119881 122045 121522 119377 11113

119262 128300 128300 128300 128308 128308 128308]]

input_ids is 128301 128301 128301 ... 0 0 0

[ERROR] ANALYZER(13818,fffed7212ce0,python):2023-06-05-08:12:22.145.897 [mindspore/ccsrc/pipeline/jit/static_analysis/async_eval_result.cc:66] HandleException] Exception happened, check the information as below.

The function call stack (See file '/code/aisynergy/examples/mPanGu/rank_0/om/analyze_fail.dat' for more details. Get instructions about analyze_fail.dat at https://www.mindspore.cn/search?inputValue=analyze_fail.dat):

0 In file /code/aisynergy/examples/mPanGu/src/pangu_alpha.py:492

1 In file /code/aisynergy/examples/mPanGu/src/pangu_alpha.py:397

2 In file /code/aisynergy/examples/mPanGu/src/pangu_alpha.py:311

3 In file /code/aisynergy/examples/mPanGu/src/pangu_alpha.py:397

4 In file /code/aisynergy/examples/mPanGu/src/pangu_alpha.py:312

5 In file /code/aisynergy/examples/mPanGu/src/pangu_alpha.py:313

6 In file /usr/local/python3.7.5/lib/python3.7/site-packages/mindspore/nn/transformer/transformer.py:1627

Traceback (most recent call last):

File "predict.py", line 245, in

main()

File "predict.py", line 240, in main

do_predict(model_predict, config, opt)

File "predict.py", line 223, in do_predict

output_ids = generate_func(model_predict, np.array([src_input_ids]), args_opt)

File "/code/aisynergy/examples/mPanGu/src/generate.py", line 233, in generate_increment

logits = model.predict(input_id, current_index, init, batch_valid_length)

File "/usr/local/python3.7.5/lib/python3.7/site-packages/mindspore/train/model.py", line 1465, in predict

result = self._predict_network(*predict_data)

File "/usr/local/python3.7.5/lib/python3.7/site-packages/mindspore/nn/cell.py", line 619, in call

out = self.compile_and_run(*args)

File "/usr/local/python3.7.5/lib/python3.7/site-packages/mindspore/nn/cell.py", line 1005, in compile_and_run

self.compile(*inputs)

File "/usr/local/python3.7.5/lib/python3.7/site-packages/mindspore/nn/cell.py", line 977, in compile

jit_config_dict=self._jit_config_dict)

File "/usr/local/python3.7.5/lib/python3.7/site-packages/mindspore/common/api.py", line 1131, in compile

result = self._graph_executor.compile(obj, args_list, phase, self._use_vm_mode())

File "/usr/local/python3.7.5/lib/python3.7/site-packages/mindspore/ops/primitive.py", line 716, in infer

return {'dtype': None, 'shape': None, 'value': fn(*value_args)}

File "/usr/local/python3.7.5/lib/python3.7/site-packages/mindspore/nn/transformer/layers.py", line 187, in _check_shape_equal

_LayerInputCheck.check_shape_equal(input_shape, param_name, func_name, target_shape)

File "/usr/local/python3.7.5/lib/python3.7/site-packages/mindspore/nn/transformer/layers.py", line 137, in check_shape_equal

[len(item) for item in target_shape])

File "/usr/local/python3.7.5/lib/python3.7/site-packages/mindspore/nn/transformer/layers.py", line 118, in check_shape_length

raise ValueError(f"{func_name} {param_name} shape length must be one of {target_len} dimension, "

ValueError: TransformerEncoderLayer x shape length must be one of [3] dimension, but got shape [1, 2560]
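The final message is a rank check: the layer accepts only inputs with 3 dimensions, and it received a 2-dimensional tensor of shape [1, 2560], where 2560 matches hidden_size in the config dump. The sketch below only mirrors that validation to make the message concrete. It does not identify why the input lost a dimension, the function name is hypothetical, and the second shape is purely illustrative.

```python
def check_rank(shape, allowed_ranks, name="x"):
    """Raise ValueError unless len(shape) is one of allowed_ranks."""
    if len(shape) not in allowed_ranks:
        raise ValueError(
            f"{name} shape length must be one of {allowed_ranks} dimension, "
            f"but got shape {list(shape)}"
        )
    return True


# The shape reported in the traceback has rank 2 and is rejected.
try:
    check_rank([1, 2560], [3])
except ValueError as err:
    print(err)

# A rank-3 shape passes the same check.
print(check_rank([1, 1, 2560], [3]))  # True
```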