

PARL is a flexible and highly efficient reinforcement learning framework.

About PARL

Features

Reproducible. We provide implementations that stably reproduce the results of many influential reinforcement learning algorithms.

Large Scale. Supports high-performance parallel training with thousands of CPUs and multiple GPUs.

Reusable. Algorithms provided in the repository can be adapted directly to a new task: define a forward network, and the training mechanism is built automatically.

Extensible. Build new algorithms quickly by inheriting from the abstract classes in the framework.

Abstractions

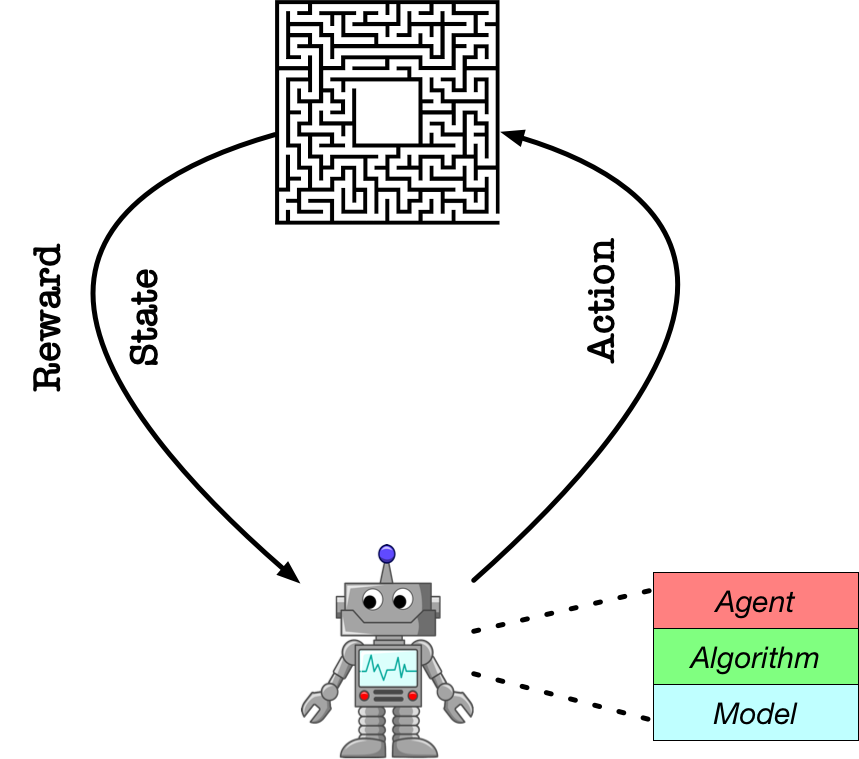

PARL aims to build an agent for training algorithms to perform complex tasks.

The main abstractions introduced by PARL that are used to build an agent recursively are the following:


Model

Model is abstracted to construct the forward network, which defines a policy network or critic network that takes the state as input.

Algorithm

Algorithm describes the mechanism for updating the parameters of a Model and usually contains at least one model.

Agent

Agent, a data bridge between the environment and the algorithm, is responsible for data I/O with the outside environment and describes data preprocessing before feeding data into the training process.

Note: For more information about base classes, please visit our tutorial and API documentation.
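To make the division of labor between the three abstractions concrete, here is a minimal sketch in plain Python. The classes `Model`, `Algorithm`, `Agent`, `LinearModel`, and `SGDRegression` are hypothetical illustrations, not PARL's own base classes, and a linear regressor trained with plain gradient descent stands in for a policy network and an RL update rule. The model only computes a forward pass, the algorithm owns the model and updates its parameters, and the agent preprocesses raw data before handing it to the algorithm. `SGDRegression` also illustrates the "Extensible" point: a new algorithm is added by inheriting from an abstract base class.

```python
from abc import ABC, abstractmethod


class Model(ABC):
    """Hypothetical base class: a forward network mapping a state to an output."""

    @abstractmethod
    def forward(self, state):
        ...


class Algorithm(ABC):
    """Hypothetical base class: owns a model and defines how its parameters are updated."""

    def __init__(self, model):
        self.model = model

    @abstractmethod
    def learn(self, state, target):
        ...


class Agent(object):
    """Hypothetical data bridge: preprocesses raw data before it reaches the algorithm."""

    def __init__(self, algorithm, scale=1.0):
        self.alg = algorithm
        self.scale = scale

    def preprocess(self, state):
        # Example preprocessing step: rescale raw observations.
        return [s / self.scale for s in state]

    def predict(self, state):
        return self.alg.model.forward(self.preprocess(state))

    def learn(self, state, target):
        return self.alg.learn(self.preprocess(state), target)


class LinearModel(Model):
    def __init__(self, dim):
        self.w = [0.0] * dim

    def forward(self, state):
        return sum(w * s for w, s in zip(self.w, state))


class SGDRegression(Algorithm):
    """A new algorithm built by inheriting from the abstract Algorithm class."""

    def __init__(self, model, lr=0.1):
        super().__init__(model)
        self.lr = lr

    def learn(self, state, target):
        # One gradient step on the squared error 0.5 * (prediction - target) ** 2.
        error = self.model.forward(state) - target
        self.model.w = [w - self.lr * error * s for w, s in zip(self.model.w, state)]
        return 0.5 * error ** 2


agent = Agent(SGDRegression(LinearModel(dim=2)), scale=10.0)
data = [([10.0, 0.0], 3.0), ([0.0, 10.0], -2.0), ([10.0, 10.0], 1.0)]
for epoch in range(200):
    for state, target in data:
        agent.learn(state, target)
print(agent.predict([10.0, 0.0]))
```

The real base classes, their method names, and the deep learning backend in PARL differ from this sketch; the tutorial and API documentation describe the actual interfaces.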

Parallelization

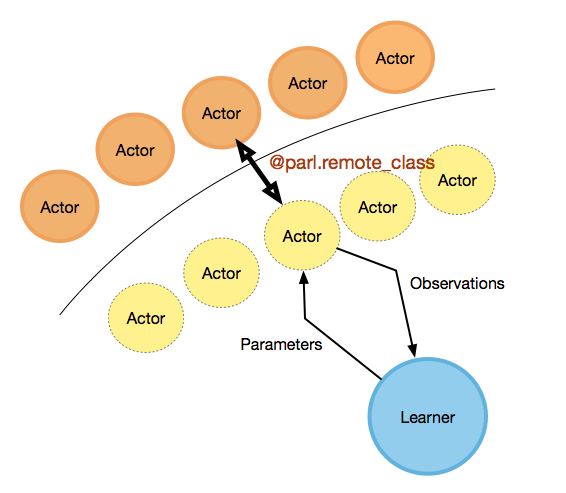

PARL provides a compact API for distributed training, allowing users to convert their code into a parallel version simply by adding a decorator. For more information about our APIs for parallel training, please visit our documentation.

Here is a Hello World example to demonstrate how easy it is to leverage external computation resources.

```python
#============Agent.py=================
import parl

@parl.remote_class
class Agent(object):

    def say_hello(self):
        print("Hello World!")

    def sum(self, a, b):
        return a + b

parl.connect('localhost:8037')
agent = Agent()
agent.say_hello()
ans = agent.sum(1, 5)  # it runs remotely, without consuming any local computation resources
```

Two steps to use external computation resources:

- Use `parl.remote_class` to decorate a class first, after which it is transformed into a new class that can run on other CPUs or machines.

- Call `parl.connect` to initialize parallel communication before creating an object. Calling any method of such an object does not consume local computation resources, since it is executed elsewhere.
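The decorator-plus-proxy idea behind these two steps can be illustrated with a purely local analogue. `remote_class_sketch` below is a hypothetical helper, not part of PARL, and it does not talk to any cluster: it constructs each decorated object inside a dedicated background thread and forwards every method call to that thread, so (unlike PARL) the work still consumes local resources. It only shows how a class decorator can turn a class into a proxy whose method calls execute somewhere other than the caller.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def remote_class_sketch(cls):
    """Hypothetical local analogue of a remote-class decorator (not PARL's implementation)."""

    class Proxy(object):
        def __init__(self, *args, **kwargs):
            # A single-thread worker stands in for a remote CPU or machine.
            self._worker = ThreadPoolExecutor(max_workers=1)
            # The real object is constructed inside the worker, not by the caller.
            self._obj = self._worker.submit(cls, *args, **kwargs).result()

        def __getattr__(self, name):
            # Only reached for attributes not found on the proxy itself.
            if name.startswith("_"):
                raise AttributeError(name)
            attr = getattr(self._obj, name)
            if not callable(attr):
                return attr

            def call(*args, **kwargs):
                # Forward the call to the worker and block until the result returns.
                return self._worker.submit(attr, *args, **kwargs).result()

            return call

    Proxy.__name__ = cls.__name__
    return Proxy


@remote_class_sketch
class Agent(object):
    def where(self):
        # Reports which thread actually executes the method.
        return threading.current_thread().name

    def sum(self, a, b):
        return a + b


agent = Agent()
print(agent.sum(1, 5))
print(agent.where() != threading.current_thread().name)
```

The caller-side code looks the same as with an ordinary object; only the place where the methods run changes, which is the property the two steps above rely on.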

As shown in the above figure, the real actors (orange circles) run on the CPU cluster, while the learner (blue circle) runs on the local GPU with several remote actors (yellow circles with dotted edges).


Users can write code as simply as writing multi-threaded code, except that the actors consume remote resources. We also provide examples of parallelized algorithms such as IMPALA and A2C; please refer to them for more usage details.
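As a rough illustration of the multi-threaded style, the sketch below starts several actors in ordinary Python threads and gathers their results. `Actor`, `rollout`, and `run_actor` are hypothetical names, and the actors run locally here. In a PARL program the actor class would additionally carry the `@parl.remote_class` decorator and be created after `parl.connect(...)`, so that each actor's work runs on the cluster; that PARL-specific part appears only in a comment and is not executed.

```python
import threading


# With PARL, this class would be decorated with @parl.remote_class and
# instantiated after parl.connect(...); here it runs locally.
class Actor(object):
    """Hypothetical actor producing placeholder "experience"."""

    def __init__(self, seed):
        self.seed = seed

    def rollout(self, steps):
        # Deterministic stand-in for summed rewards over one episode.
        return sum(self.seed * t for t in range(steps))


num_actors = 4
results = [None] * num_actors


def run_actor(idx):
    # Each thread owns one actor and writes to its own result slot.
    actor = Actor(seed=idx)
    results[idx] = actor.rollout(steps=10)


threads = [threading.Thread(target=run_actor, args=(i,)) for i in range(num_actors)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(results)
```

Giving each thread its own result slot avoids the need for a lock, which keeps the learner-side code as simple as ordinary threaded Python.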

Install

Dependencies

- Python 3.6+ (Python 3.8+ is preferable for distributed training).

- paddlepaddle>=2.3.1 (optional if you only want to use the parallelization APIs)

```
pip install parl
```

Getting Started

Several points to get you started:

- Tutorial: How to solve the CartPole problem.

- Xparl Usage: How to set up a cluster with `xparl` and compute in parallel.

- Advanced Tutorial: Create customized algorithms.

- API documentation

For beginners who know little about reinforcement learning, we also provide an introductory course: ( Video | Code )

Examples

- QuickStart

- DQN

- ES

- DDPG

- A2C

- TD3

- SAC

- QMIX

- MADDPG

- PPO

- CQL

- IMPALA

- Winning Solution for NIPS2018: AI for Prosthetics Challenge

- Winning Solution for NIPS2019: Learn to Move Challenge

- Winning Solution for NIPS2020: Learning to Run a Power Network Challenge
