Dear OpenI User

Thank you for your continuous support to the Openl Qizhi Community AI Collaboration Platform. In order to protect your usage rights and ensure network security, we updated the Openl Qizhi Community AI Collaboration Platform Usage Agreement in January 2024. The updated agreement specifies that users are prohibited from using intranet penetration tools. After you click "Agree and continue", you can continue to use our services. Thank you for your cooperation and understanding.

For more agreement content, please refer to the《Openl Qizhi Community AI Collaboration Platform Usage Agreement》

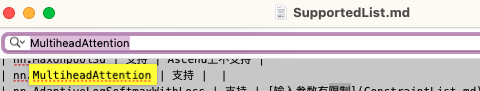

[WIP]nn.MultiheadAttention to nn.MultiheadAttention, 1 year ago

Use raise instead of assert.

Updated.
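A minimal sketch of the reasoning behind the raise-versus-assert suggestion: Python strips assert statements when run with the -O flag, so argument validation written with assert can silently disappear, while an explicit raise always runs. The helper name check_embed_dim and its error message are hypothetical and are not taken from this PR.

```python
def check_embed_dim(embed_dim, num_heads):
    """Hypothetical validation helper; not the PR's actual code."""
    # An explicit raise is kept even under `python -O`, unlike assert.
    if embed_dim % num_heads != 0:
        raise ValueError(
            f"embed_dim ({embed_dim}) must be divisible by num_heads ({num_heads})"
        )
    return embed_dim // num_heads


# Valid configuration: each head gets embed_dim // num_heads features.
head_dim = check_embed_dim(8, 2)

# Invalid configuration: the error is raised regardless of optimization flags.
try:
    check_embed_dim(8, 3)
except ValueError as err:
    message = str(err)
```

Raising a specific exception type also lets callers and tests match on ValueError rather than on a generic AssertionError.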

Why is the ms.ops interface used here?

Updated.

For functional completeness testing, you can refer directly to test cases such as test_multihead_attn_add_zero_attn in https://github.com/pytorch/pytorch/blob/master/test/test_nn.py

Added the test cases in test/nn/test_multihead_attention.py.
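For context on what a test like test_multihead_attn_add_zero_attn exercises, here is a rough pure-Python sketch. It assumes the behavior described in the PyTorch documentation for add_zero_attn (a batch of zeros is appended to the key and value sequences). The attend and softmax helpers are hypothetical, handle a single query and a single head, and are not the test code from either repository.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values, add_zero_attn=False):
    """Single-query scaled dot-product attention (illustrative only)."""
    if add_zero_attn:
        # Append one all-zero key and one all-zero value along the sequence axis.
        keys = keys + [[0.0] * len(query)]
        values = values + [[0.0] * len(values[0])]
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return output, weights


query = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 2.0], [3.0, 4.0]]

out_plain, w_plain = attend(query, keys, values)
out_zero, w_zero = attend(query, keys, values, add_zero_attn=True)
```

With the flag set, the weight vector gains one extra entry for the zero key, and because that entry takes some probability mass while contributing a zero value, the output shrinks toward zero. A functional test can check exactly these shape and value differences against a reference implementation.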

What feature is this TODO for?

NonDynamicallyQuantizableLinear was introduced by torch to work around an uncommon error. ms has never implemented it, so a plain Linear is used in its place.

If the multi_head_attention_forward called above is a mindspore interface, then attn_output here is a mindspore tensor, right?

It is already in the supportedList.

aeae9881d5.